Using SetContext to efficiently solve NLPs

One complication that arises when solving non-linear optimization problems (NLPs) is that extrinsic indexes can introduce computational inefficiencies. The problem occurs when array results within your model are indexed by the extrinsic dimensions, even though any single optimization needs only one slice of each result. While one NLP is being solved, full arrays are computed and propagated through the internals of your model, but the NLP being solved uses only a single slice of each. The remainder of each array, which may have required substantial computation to obtain, is thrown away without being used.

The «SetContext» parameter of DefineOptimization provides a way to avoid this inefficiency.

The inefficiency only arises when you are solving NLPs, so if your problem is linear or quadratic, there is no need to make use of «SetContext». Also, it only arises when extrinsic indexes are present -- in other words, when DefineOptimization found that extra indexes were present in the objective or constraints and thus array-abstracted over these extra (extrinsic) indexes, returning an array of optimization problems. Thus, if you are dealing with a single NLP, once again there is no need to worry about this. One situation where it does arise naturally is the case where you are performing an optimization that depends on uncertain quantities, such that in the uncertainty mode you end up with a Monte Carlo sample of NLPs. If you don't utilize «SetContext» appropriately, the time-complexity of your model will scale quadratically with sample size rather than linearly.
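To see where the quadratic scaling comes from, here is a rough count under simplifying assumptions that are not part of the original text: the sample size is $N$, each NLP needs about $k$ evaluations of the objective, and evaluating one slice of the downstream variables costs one unit of work.

```latex
\begin{align*}
\text{work without SetContext} &\approx
  \underbrace{N}_{\text{NLPs}} \times
  \underbrace{k}_{\text{evaluations per NLP}} \times
  \underbrace{N}_{\text{slices per evaluation}} = kN^{2} \\
\text{work with SetContext} &\approx N \times k \times 1 = kN
\end{align*}
```

For example, with $N = 1000$ and $k = 50$, this estimate gives 50,000,000 slice evaluations in the first case and 50,000 in the second.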

Inefficiency Example

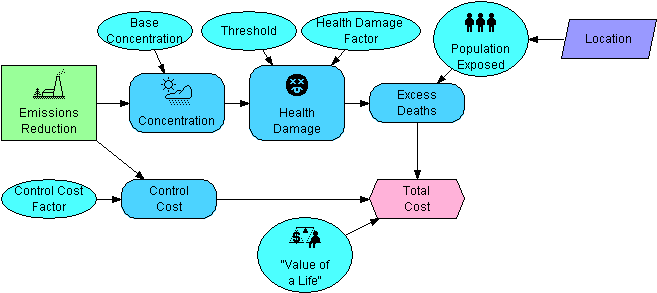

Consider the following optimization based on the model depicted above:

DefineOptimization( decision: Emissions_reduction, Minimize: Total_cost )

For a given Emissions_reduction, the computed mid value for Total_cost is indexed by Location. Location therefore becomes an extrinsic index: DefineOptimization is array-abstracted across the Location index and returns an array of optimizations, with a separate NLP for each location. The solution finds the optimal emissions reduction for each location, and each of the optimizations is solved separately.

Now, consider what computation actually takes place as each NLP is being solved. First, the NLP corresponding to @Location=1 is solved. To solve an NLP, the solver engine proposes a candidate solution, in this case a possible value for Emissions_reduction. This value is "plugged in" as the value for Emissions_reduction, and Total_cost is then evaluated. During that evaluation, Population_exposed, Excess_deaths and Total_cost are each computed, and each result is indexed by Location. At this point, Total_cost[@Location=1] is extracted, and the total cost for the remaining locations is ignored (since the NLP for @Location=1 is the one being solved). The search continues, plugging a new value into Emissions_reduction and re-evaluating Total_cost. Each time, all the variables between Emissions_reduction and Total_cost are re-evaluated, and each time Excess_deaths and Total_cost needlessly compute their values for all locations, when in reality only the @Location=1 value ends up being used.
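The wasted work described above can be counted with a small Python sketch. This is not Analytica code and does not show how DefineOptimization works internally. The toy `total_cost` function, the grid search standing in for the solver engine, and the sizes used are all hypothetical, chosen only to count how many per-location evaluations occur.

```python
N_LOCATIONS = 5      # size of the extrinsic index (hypothetical)
N_CANDIDATES = 20    # candidate values the toy "solver" tries per NLP

slice_evals = 0      # running count of per-location objective evaluations


def total_cost(emissions_reduction, locations):
    """Toy stand-in for the model: one cost per requested location."""
    global slice_evals
    slice_evals += len(locations)
    return [(1 + loc) * (emissions_reduction - 0.5) ** 2 + emissions_reduction
            for loc in locations]


def solve(loc, single_slice):
    """Grid search standing in for the solver engine's candidate proposals."""
    # Without slicing, every evaluation computes the cost for all locations.
    locations = [loc] if single_slice else list(range(N_LOCATIONS))
    best_x, best_cost = None, float("inf")
    for i in range(N_CANDIDATES):
        x = i / (N_CANDIDATES - 1)
        costs = total_cost(x, locations)
        cost = costs[locations.index(loc)]  # only this slice is ever used
        if cost < best_cost:
            best_x, best_cost = x, cost
    return best_x


def run(single_slice):
    """Solve one NLP per location; return the solutions and the work done."""
    global slice_evals
    slice_evals = 0
    solutions = [solve(loc, single_slice) for loc in range(N_LOCATIONS)]
    return solutions, slice_evals


full_solutions, full_work = run(single_slice=False)
slice_solutions, slice_work = run(single_slice=True)
print(full_work, slice_work)  # prints: 500 100
```

Both runs find the same solutions, but the first performs 5 × 20 × 5 = 500 per-location evaluations, while the second performs 5 × 20 × 1 = 100. The gap grows with the size of the extrinsic index.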

During the search for the solution, Population_exposed is only computed a single time. Since it is not downstream from the decision variable, its initial value is never invalidated, so for Population_exposed, there is actually no wasted computation. Our concern with inefficiency in this example lies solely with Excess_deaths and Total_cost.

How SetContext Works
