Using SetContext to efficiently solve NLPs

One of the many complications that arise when solving non-linear optimization problems (NLPs) is that extrinsic indexes can introduce computational inefficiencies. This happens when array results within your model are indexed by the extrinsic dimensions, even though any single optimization needs only one slice of those results. While one NLP is being solved, the full arrays are computed and propagated through the internals of your model, yet only a single slice is actually used by the NLP being solved. Even though it may have required substantial computation to obtain, the remainder of each array is thrown away unused.

The «SetContext» parameter of DefineOptimization provides a way to avoid this inefficiency.

The inefficiency only arises when you are solving NLPs, so if your problem is linear or quadratic, there is no need to make use of «SetContext». Also, it only arises when extrinsic indexes are present -- in other words, when DefineOptimization found that extra indexes were present in the objective or constraints and thus array-abstracted over these extra (extrinsic) indexes, returning an array of optimization problems. Thus, if you are dealing with a single NLP, once again there is no need to worry about this. One situation where it does arise naturally is the case where you are performing an optimization that depends on uncertain quantities, such that in the uncertainty mode you end up with a Monte Carlo sample of NLPs. If you don't utilize «SetContext» appropriately, the time-complexity of your model will scale quadratically with sample size rather than linearly.

Inefficiency Example

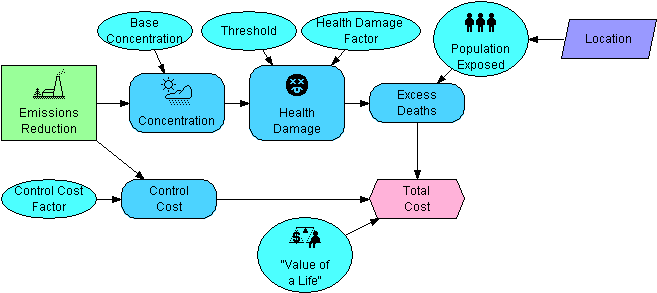

Consider the following optimization based on the model depicted above:

DefineOptimization(decision: Emissions_reduction, Maximize: Total_cost)

For a given Emissions_reduction, the computed mid value for Total_cost is indexed by Location. Location becomes an extrinsic index, i.e., DefineOptimization is array-abstracted across the Location index and returns an array of optimizations. With a separate NLP for each location, the solution finds the optimal emissions reduction for each region separately.

Now, consider what computation actually takes place as each NLP is being solved. First, the NLP corresponding to @Location = 1 is solved. An NLP is solved by having the solver engine propose a candidate solution, in this case a possible value for Emissions_reduction. This value is "plugged in" as the value of Emissions_reduction, and Total_cost is then evaluated. During that evaluation, Population_exposed, Excess_deaths and Total_cost are each computed, and each result is indexed by Location. At this point, Total_cost[@Location = 1] is extracted and the total cost for the remaining locations is ignored (since the NLP for @Location = 1 is being solved). The search continues by plugging a new value into Emissions_reduction and re-evaluating Total_cost. Each time, all the variables between Emissions_reduction and Total_cost are re-evaluated, and each time Excess_deaths and Total_cost needlessly compute their values for all locations, when only the @Location = 1 value is actually used.
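The wasted work can be made concrete with a small simulation. This is a Python sketch, not Analytica code, and everything in it is a hypothetical stand-in: four locations, made-up population figures, a made-up cost formula, and a fixed grid of 11 candidate reductions in place of the solver's real search. It counts how many Location cells of Total_cost get evaluated when no context variables are set.

```python
# Toy simulation in Python, not Analytica: counts how many Location cells of
# Total_cost are evaluated when no context variables are set.
# All data, formulas and the candidate grid are hypothetical.
LOCATIONS = ["A", "B", "C", "D"]
POPULATION_EXPOSED = {"A": 1.0, "B": 2.0, "C": 3.0, "D": 4.0}
CANDIDATES = [i / 10 for i in range(11)]  # stand-in for the solver's search points

cells_computed = 0


def total_cost(reduction):
    """Evaluate Total_cost at every location, as the model does without SetContext."""
    global cells_computed
    result = {}
    for loc in LOCATIONS:
        cells_computed += 1
        excess_deaths = POPULATION_EXPOSED[loc] * (1 - reduction)
        control_cost = reduction ** 2
        result[loc] = excess_deaths + control_cost
    return result


def solve_nlp(loc):
    """Solve the NLP for one location by scanning the candidate grid."""
    # Maximize, matching the DefineOptimization call; the full array is
    # recomputed at every search point, but only the cell for `loc` is used.
    return max(CANDIDATES, key=lambda r: total_cost(r)[loc])


solutions = {loc: solve_nlp(loc) for loc in LOCATIONS}
cells_used = len(LOCATIONS) * len(CANDIDATES)
print(f"cells computed: {cells_computed}, cells used: {cells_used}")
# prints: cells computed: 176, cells used: 44
```

Three out of every four cells computed are discarded; with N locations, the fraction wasted is (N - 1)/N.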

Resolving inefficiency with SetContext

The «SetContext» parameter of DefineOptimization provides the mechanism that allows us to avoid the inefficiency described above.

In your call to DefineOptimization, you may optionally list a set of context variables. When the solution process for each NLP begins, each context array is first computed and then sliced along all the extrinsic indexes, extracting the particular slice required by the NLP.

In this example, Excess_deaths and Total_cost contain the extrinsic index Location, but only because of the influence of Population_exposed, which is not downstream of the decision node. Setting context on an array replaces its value with the slice required by the active optimization, which also reduces the dimensionality of the nodes that depend on it. Setting context on Population_exposed therefore eliminates the Location index from Excess_deaths and Total_cost during each NLP, without altering the solver's search.

DefineOptimization(decision: Emissions_reduction, Maximize: Total_cost, SetContext: Population_exposed)
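Continuing the same hypothetical Python toy (again not Analytica, with invented data and a fixed candidate grid), the effect of listing Population_exposed in «SetContext» can be sketched by slicing that variable to the active location once, before the search starts. Every downstream evaluation then computes a single cell.

```python
# Toy simulation in Python, not Analytica, of SetContext on Population_exposed.
# All data, formulas and the candidate grid are hypothetical.
LOCATIONS = ["A", "B", "C", "D"]
POPULATION_EXPOSED = {"A": 1.0, "B": 2.0, "C": 3.0, "D": 4.0}
CANDIDATES = [i / 10 for i in range(11)]  # stand-in for the solver's search points

cells_computed = 0


def total_cost(reduction, population):
    """Evaluate Total_cost for whichever locations remain in `population`."""
    global cells_computed
    result = {}
    for loc, pop in population.items():
        cells_computed += 1
        result[loc] = pop * (1 - reduction) + reduction ** 2
    return result


def solve_nlp_with_context(loc):
    """Slice the context variable once, then search over the candidates."""
    # The context variable is sliced along the extrinsic index before the
    # search begins, so only one Location cell flows downstream.
    sliced = {loc: POPULATION_EXPOSED[loc]}
    return max(CANDIDATES, key=lambda r: total_cost(r, sliced)[loc])


solutions = {loc: solve_nlp_with_context(loc) for loc in LOCATIONS}
print(f"cells computed: {cells_computed}")
# prints: cells computed: 44
```

Only the cells the NLPs actually use are computed, so the toy does a quarter of the work of the unsliced version while finding the same optima.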

The section Selecting Context Variables below discusses which variables are appropriate or are bad choices for «SetContext».

More inefficiency with Monte Carlo

Next, suppose we solve the optimization from an uncertainty view. Here, for each random scenario of the uncertain variables, we'll solve a separate optimization. Hence, Run joins Location as an extrinsic index -- we have an array of optimizations, indexed by Location and Run.

When these are solved, each NLP in this 2-D array is solved separately. So the NLP at [@Location = 1, @Run = 1] is solved first, with the optimizer engine re-evaluating the model at several candidate Emissions_reduction values until it is satisfied that it has converged to the optimal reduction level. Then the NLP at [@Location = 1, @Run = 2] is solved in the same fashion, and so on.

In this model, every variable except our decision, Emissions_reduction, and the index Location is uncertain. So while one particular NLP is being solved, a Monte Carlo sample is computed for each variable in the model, finally resulting in a 2-D Monte Carlo sample for Total_cost. From this 2-D array, the single cell corresponding to the coordinate of the active NLP is extracted and used; the remaining values are wasted.

In an ideal world, we would expect the solution time to scale linearly with the number of locations and linearly with the sample size. With the inefficiencies just described, the time to solve each individual NLP scales linearly with the number of locations and linearly with the sample size, but since the number of NLPs also scales linearly with each of these, the net solution time scales quadratically with each. Hence, if you double the number of locations, your model takes 4 times longer to find the optimal solution. If you increase your sample size from 100 to 1000, it suddenly takes 100 times longer (not 10 times longer) to solve.
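The arithmetic behind these figures can be checked with a short Python sketch. It assumes, as a simplification, that every NLP needs the same number of search points E regardless of problem size (E = 20 here is hypothetical), and it counts Total_cost cells only.

```python
def cells_without_context(n_locations, sample_size, evals_per_nlp):
    """Total_cost cells evaluated with no context variables.

    Each of the n_locations * sample_size NLPs recomputes the full 2-D
    array (n_locations * sample_size cells) at every search point.
    """
    n_nlps = n_locations * sample_size
    return n_nlps * evals_per_nlp * n_locations * sample_size


def cells_with_context(n_locations, sample_size, evals_per_nlp):
    """With full context, each search point computes a single cell."""
    return n_locations * sample_size * evals_per_nlp


E = 20  # hypothetical number of search points per NLP
base = cells_without_context(5, 100, E)
doubled_locations = cells_without_context(10, 100, E) / base
tenfold_sample = cells_without_context(5, 1000, E) / base
tenfold_with_context = cells_with_context(5, 1000, E) / cells_with_context(5, 100, E)
print(doubled_locations, tenfold_sample, tenfold_with_context)
# prints: 4.0 100.0 10.0
```

The last ratio shows the linear scaling that suitable context variables restore.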

Applying SetContext to uncertain variables

To avoid inefficiencies from the Run index, we could list additional context variables:

DefineOptimization(decision: Emissions_reduction, Maximize: Total_cost, SetContext: Base_concentration, Threshold, Health_damage_factor, Population_exposed, Control_cost_factor, value_of_a_life)

Compare the context variables selected here with the diagram. Do any of these depend directly or indirectly on the decision variable? Can you explain why this set of context variables was selected?

Selecting Context Variables

Which variable or variables should you select to use as a context variable? Here are some guidelines.

No downstream operations on extrinsic indexes

When you select X to be a context variable, it is essential that no variable depending on X operates over an extrinsic index. The presence of such a variable would alter the logic of your model and produce incorrect results! For example, if Y is defined as Sum(X, Location), where Location is extrinsic, then you should not select X as a context variable.
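A tiny Python illustration (not Analytica; the values are hypothetical) shows how the meaning of Y := Sum(X, Location) silently changes if X is made a context variable: inside the NLP for one location, X holds only that location's slice, so the "sum" covers a single cell.

```python
# Toy illustration in Python, not Analytica; values are hypothetical.
X = {"A": 1.0, "B": 2.0, "C": 3.0}  # X indexed by the extrinsic index Location


def y_of(x):
    """Y := Sum(X, Location): sums over whatever Location cells X holds."""
    return sum(x.values())


y_correct = y_of(X)
# If X were listed in SetContext, the NLP for Location A would see only the
# slice of X at A, and Y would no longer be a sum over all locations.
y_in_context_a = y_of({"A": X["A"]})
print(y_correct, y_in_context_a)
# prints: 6.0 1.0
```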

Suppose there are two extrinsic indexes, I and J, and you know there are no downstream variables operating on I, but you can't say the same about J. You'd like to select X as a context variable for only the I index. What can you do? In this case, you can accomplish this with a slight modification to your model, introducing one new variable and modifying the definition of X slightly:

Variable I_ctx := I
Variable X := («origDefinition»)[I = I_ctx]

Then you list I_ctx in the «SetContext» parameter.
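The behavior of this trick can be sketched in Python (not Analytica; the data are hypothetical, and a dictionary keyed by (i, j) stands in for a 2-D array). Outside an NLP, I_ctx equals the whole index I, so the subscript is the identity; inside an NLP, «SetContext» has reduced I_ctx to one value, so X is sliced along I only, and a downstream Sum over J still gives the right answer.

```python
# Toy illustration in Python, not Analytica. X has two extrinsic indexes,
# I and J; only I is to be put in context. Values are hypothetical.
I = [1, 2]
J = ["a", "b", "c"]
ORIG = {(i, j): 10 * i + k for i in I for k, j in enumerate(J)}


def x_definition(i_ctx):
    """X := (origDefinition)[I = I_ctx].

    Outside an NLP, I_ctx := I (a list of all I values) and this is the
    identity; inside an NLP, SetContext has sliced I_ctx to one value.
    """
    selected = i_ctx if isinstance(i_ctx, list) else [i_ctx]
    return {(i, j): v for (i, j), v in ORIG.items() if i in selected}


def sum_over_j(x):
    """Downstream variable that operates over J: Sum(X, J) for each I."""
    totals = {}
    for (i, _), v in x.items():
        totals[i] = totals.get(i, 0) + v
    return totals


print(sum_over_j(x_definition(I)))  # {1: 33, 2: 63}
print(sum_over_j(x_definition(2)))  # {2: 63}: the J sum is unaffected
```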

Not downstream from a decision variable

Ideally, a context variable should not be downstream of a decision variable (i.e., it should not be influenced, directly or indirectly, by a decision variable's value).

Variables downstream from decision variables are invalidated every time a new search point is plugged in, and thus must be recomputed every time. By selecting variables that are not downstream (as was done in the above example), the sliced value is computed once and cached for each NLP solved.

Context variables that are downstream from decisions are handled somewhat differently by «SetContext». Instead of replacing the value with the appropriate slice, the parsed definition of the variable is temporarily wrapped in Slice operations. So, for example, if Control_cost is specified as a context variable, its parsed definition would become (for the duration of the NLP solve):

Slice(Slice(-Cost_factor*(Logten(1 - Reduction)), Location, 1), Run, 1)

Here the original definition is the innermost expression, -Cost_factor*(Logten(1 - Reduction)). This is not as efficient as replacing the value by its slice once: the original definition must still be evaluated at every search point, producing a full array from which only the cell of interest is sliced out, so most of that computation is still wasted.
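The difference between the two treatments can be put in rough numbers with a Python sketch. It is a simplified count, not a description of Analytica's internals: the sizes and the number of search points per NLP are hypothetical, and it counts only cells of the context variable's original definition computed during one NLP.

```python
# Toy count in Python, not Analytica; all sizes are hypothetical.
N_LOCATIONS, SAMPLE_SIZE = 5, 100
EVALS_PER_NLP = 20                 # search points the solver tries per NLP
CELLS = N_LOCATIONS * SAMPLE_SIZE  # cells in the full Location x Run array


def cells_for_context_variable(downstream_of_decision):
    """Cells of the context variable's original definition computed per NLP."""
    if downstream_of_decision:
        # Slice(Slice(origDefinition, Location, ...), Run, ...) is re-evaluated
        # at every search point, computing the full array each time.
        return EVALS_PER_NLP * CELLS
    # Not downstream: computed (at most) once, sliced, and the slice cached.
    return CELLS


print(cells_for_context_variable(False), cells_for_context_variable(True))
# prints: 500 10000
```

Even so, a downstream context variable still helps the nodes below it, since they receive only the sliced cell.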

If you are willing to make minor modifications to your model, it should never be necessary to select a context variable downstream from a decision. If you are tempted to do so, you can always use this trick:

Variable I_ctx := I
Variable X := («origDefinition»)[I = I_ctx]

with

DefineOptimization(..., SetContext: I_ctx, ...)

Do not use the Extrinsic Index itself

It may seem like the extrinsic index variable itself is the obvious context variable (e.g., Location in the above example). However, that is a dangerous choice. Indexes are often used as table indexes -- tables expect their table indexes to be real indexes. Changing a table index to a single value will break any tables that use it. Furthermore, there are likely to be other points in your model that operate over the index in various ways. The Run index is especially troublesome, since all distributions and statistical functions implicitly operate over it, so you should never use Run.

Only the maximally downstream context

You may have an entire submodel with hundreds of variables, none of which is downstream of any decision variable. The result of this submodel is used by a variable that is downstream from decision variables. In this case, only the final result variable of the submodel needs to be listed in «SetContext». While other variables within the submodel could also be listed, there is no reason to do so: the context is captured in the submodel's final result variable, which fully accomplishes the goal of preventing the extrinsic indexes from unnecessarily entering the computations within the optimization search.

Do use Parametric Choice variables

It is very common for Analytica models to have Choice inputs, where you can select a single value or select "All" to compare results over multiple scenarios. In a purely parametric input like this, the model never operates over the index -- it essentially computes each "scenario" separately.

When such an input exists, and the user selects "All", the input becomes an index, and appears as an extrinsic index for your optimization -- i.e., you end up with a separate optimization for each scenario. The efficiency issues discussed on this page then come into play.

A parametric choice input is a perfect candidate for a context variable. By definition, your model already works as expected when a single value is selected, so there is no worry about downstream operations over the extrinsic index, and it is also known not to depend on a decision variable. It avoids the gotchas that you may need to worry about if you try to be more clever.

There is no harm in listing parametric Choice variables even when they are set to a single value. «SetContext» has no effect in that case, but kicks in and preserves efficiency when they are changed to "All".

Special Uses

Although the primary purpose of «SetContext» is to provide a mechanism for avoiding the computational inefficiency of array-abstracted NLPs, it has some additional uses.

Tracking Active NLP when debugging

When debugging, you may need to focus on what is happening during a particular NLP. For example, perhaps you are displaying message boxes or a progress bar dialog and you want to only have it pop up when a particular NLP is active, or have it display information about which NLP is currently being solved. Using the «SetContext» parameter, you can easily track the coordinate of the currently active NLP.

To do this, just introduce a new context variable for each extrinsic index, e.g.:

Variable Location_ctx := Location
Variable Run_ctx := Run

Then, list these in «SetContext», and for debugging you can include code such as the following in nodes within your model:

ShowProgressBar("Solving", "Location="&Location_ctx&", Run="&Run_ctx, (Run_ctx - 1)/sample_size)

or

If Location_ctx = 'Los Angeles' Then MsgBox(Excess_deaths)
