Tutorial on using pre-trained OpenAI language models

Under construction -- not ready to be used yet

In this tutorial you will learn how to use language models (via the OpenAI API) from your Analytica model, and also learn about language models and how they work.

Requirements

To use this library, you must have:

- An Analytica Enterprise or Analytica Optimizer edition, release 6.3 or higher.

- Your own OpenAI API key.

To get an OpenAI API key

- Go to https://platform.openai.com/ and sign up for an account.

- Click on your profile picture and select View API keys

- Click Create new secret key.

- Copy this key to the clipboard (or otherwise save it)

Getting started

- Download the library to your "C:\Program Files\Lumina\Analytica 4.6\Libraries" folder.

- Launch Analytica.

- Start a new model.

- Select File / Add Library..., select OpenAI API library.ana [OK], select Link [OK].

- Navigate into the OpenAI API lib library module.

- Press either Save API key in env var or Save API key with your model. Read the text on that page to understand the difference.

- A message box appears asking you to enter your API key. Paste it into the box to continue.

At this point, you should be able to call the API, and you won't need to re-enter your key each time. View the result of Available models to test whether the connection to OpenAI is working. This shows you the list of OpenAI models that you have access to.

What OpenAI models are available to use?

To view the list of OpenAI models that you have access to, navigate into the diagram for the OpenAI API Library and evaluate "Available Models" (identifier: OAL_Avail_Models). You may see many of the following (this list changes over time):

Base Models:

- ada

- babbage

- curie

- davinci

- gpt-3.5-turbo

- whisper-1

Code Search Models:

- ada-code-search-code

- ada-code-search-text

- babbage-code-search-code

- babbage-code-search-text

- code-search-ada-code-001

- code-search-ada-text-001

- code-search-babbage-code-001

- code-search-babbage-text-001

Search Document Models:

- ada-search-document

- babbage-search-document

- curie-search-document

- davinci-search-document

- text-search-ada-doc-001

- text-search-babbage-doc-001

- text-search-curie-doc-001

- text-search-davinci-doc-001

Search Query Models:

- ada-search-query

- babbage-search-query

- curie-search-query

- davinci-search-query

- text-search-ada-query-001

- text-search-babbage-query-001

- text-search-curie-query-001

- text-search-davinci-query-001

Similarity Models:

- ada-similarity

- babbage-similarity

- curie-similarity

- davinci-similarity

- text-similarity-ada-001

- text-similarity-babbage-001

- text-similarity-curie-001

- text-similarity-davinci-001

Instruction Models:

- curie-instruct-beta

- davinci-instruct-beta

Edit Models:

- code-davinci-edit-001

- text-davinci-edit-001

Text Models:

- text-ada-001

- text-babbage-001

- text-curie-001

- text-davinci-001

- text-davinci-002

- text-davinci-003

- text-embedding-ada-002

Turbo Models:

- gpt-3.5-turbo-0301

- gpt-3.5-turbo-0613

- gpt-3.5-turbo-16k

- gpt-3.5-turbo-16k-0613

GPT-4 models:

- gpt-4

- gpt-4-0314

- gpt-4-0613

Generating text completions

In this segment, we will explore and test the capabilities of text generation. You'll have the opportunity to see how the model generates text based on a given prompt and learn how to manipulate various parameters to control the generation process.

Now you'll construct a model capable of generating text sequences, with the specific characteristics of these sequences being determined by the parameters you define. Here's how you do it:

- Create an Index Node for Completions: Begin by constructing an Index node and name it "Completion number". This node plays an important role in your model, as it dictates the number of different text completions your model will generate. Inside the node's definition, enter the following:

1..4

- Set Up a Variable Node for Prompt Generation: Next, create a variable node and title it "Example of prompt generation". Inside this node's definition, enter the following expression:

Prompt_completion("In the night sky, ", Completion_index:Completion_number, max_tokens:100)

This command instructs the model to generate text that continues from the prompt "In the night sky, ". The Completion_index parameter is set to the Completion_number index, so the model generates one completion for each element of that index (four in this example). The max_tokens:100 parameter limits each completion to at most 100 tokens.

This configuration provides a playground for you to experiment with your model, modify the parameters, and observe how changes affect text generation. Through this interactive learning process, you'll gain deeper insights into how to navigate your model effectively to generate the desired results.

Word likelihoods

(tutorial about next token probabilities. logits and perplexity, etc.)

Language models work by receiving an input sequence and then assigning a score, or log probability, to each potential subsequent word, called a token, from its available vocabulary. The higher the score, the more the model believes that a particular token is a fitting successor to the input sequence.

In this part of our discussion, we're going to unpack these token probabilities further. We're seeking to understand why the model gives some tokens a higher score, suggesting they are more likely, while others receive a lower score, indicating they are less likely.

To bring this into perspective, let's think about the phrase, "The dog is barking". It's logical to expect the word "barking" to get a high score because it completes the sentence well. But, if we switch "barking" with a misspelled word such as "barkeing", the model's score for this token would drop, reflecting its recognition of the spelling mistake. In the same vein, if we replace "barking" with an unconnected word like "piano", the model would give it a much lower score compared to "barking". This is because "piano" is not a logical ending to the sentence "The dog is".
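The relationship between raw scores and log probabilities can be sketched in a few lines of Python. This is only an illustration: the scores in toy_scores are made up for the "The dog is" example and are not produced by any OpenAI model, and log_softmax is a helper name chosen here, not part of the OpenAI API library.

```python
import math

def log_softmax(scores):
    """Convert raw scores (logits) into log probabilities that sum to 1 after exp()."""
    m = max(scores.values())  # subtract the max for numerical stability
    log_z = m + math.log(sum(math.exp(s - m) for s in scores.values()))
    return {tok: s - log_z for tok, s in scores.items()}

# Hypothetical scores for the token following "The dog is" (invented for illustration).
toy_scores = {"barking": 5.0, "sleeping": 4.0, "barkeing": -1.0, "piano": -3.0}

log_probs = log_softmax(toy_scores)
for token, lp in sorted(log_probs.items(), key=lambda kv: -kv[1]):
    print(f"{token:10s} logprob={lp:7.3f}  prob={math.exp(lp):.4f}")
```

A log probability closer to zero means the token is considered more likely; very negative values correspond to tokens the model considers poor continuations.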

Controlling completion randomness

When generating text, you might want to control how random or predictable the output is. Two parameters that can help you with this are 'temperature' and 'top_p'. Let's explore how they work:

- Temperature: The temperature parameter, which ranges from 0 to 2, influences the randomness of the output. Higher values, such as 1.1, yield more diverse but potentially less coherent text, while lower values, such as 0.7, result in more focused and deterministic outputs.

- Top_p: Ranging from 0 to 1, the top_p parameter restricts sampling to the most probable tokens, adding tokens in order of decreasing probability until their cumulative probability reaches a threshold (p). For instance, a lower value, like 0.2, makes the output more predictable because fewer candidates remain for the next word.
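To make the two parameters concrete, here is a minimal Python sketch of how temperature scaling and top_p (nucleus) filtering are commonly described. It assumes the textbook formulation (divide scores by the temperature, then keep the smallest set of top tokens whose cumulative probability reaches p). The toy_logits values are invented, and OpenAI's actual implementation may differ in its details.

```python
import math

def apply_temperature(logits, temperature):
    """Divide logits by the temperature (must be > 0 here), then normalize to probabilities."""
    scaled = {t: s / temperature for t, s in logits.items()}
    m = max(scaled.values())
    exps = {t: math.exp(s - m) for t, s in scaled.items()}
    z = sum(exps.values())
    return {t: e / z for t, e in exps.items()}

def top_p_filter(probs, p):
    """Keep the most probable tokens until their cumulative mass reaches p, then renormalize."""
    kept, total = {}, 0.0
    for tok, pr in sorted(probs.items(), key=lambda kv: -kv[1]):
        kept[tok] = pr
        total += pr
        if total >= p:
            break
    return {t: pr / total for t, pr in kept.items()}

# Hypothetical scores for the token following "In the night sky, ".
toy_logits = {"stars": 2.0, "the": 1.5, "a": 1.0, "owls": 0.0, "piano": -2.0}

cool = apply_temperature(toy_logits, 0.7)   # sharper distribution
warm = apply_temperature(toy_logits, 1.1)   # flatter distribution
nucleus = top_p_filter(apply_temperature(toy_logits, 1.0), 0.2)
print(cool, warm, nucleus, sep="\n")
```

With the lower temperature, more probability is concentrated on the top token; with a small top_p, only the highest-ranked tokens remain eligible for sampling.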

Here's how we can apply temperature in practice:

- Change the "Example of prompt generation" node definition:

Prompt_completion("In the night sky, ", temperature:temperature_value, Completion_index:Completion_number, max_tokens:40)

- Create a variable node, titled "temperature_value" and assign its definition a number between 0-2. An example of what the results look like if you set temperature to 1.1 is shown below:

Here's how we can apply top_p in practice:

- Change the "Example of prompt generation" node definition:

Prompt_completion("In the night sky, ", top_p:top_p_value, Completion_index:Completion_number, max_tokens:40)

- Create a variable node, titled "top_p_value" and assign its definition a number between 0-1. An example of what the results look like if you set top_p to 0.1 is shown below:

By adjusting these parameters, you can explore the different ways in which text generation can be fine-tuned to suit your specific needs. Whether you want more creative and diverse text or more focused and uniform text, these controls provide the flexibility to achieve the desired results.

In-context learning

(What is in-context learning?) (Some examples: Translation, ....)

Getting a pre-trained language model to perform the desired task can be quite challenging. While these models possess vast knowledge, they might struggle to comprehend the specific task's format unless tuned or conditioned.

Therefore, it is common to write prompts that tell the language model the format of the task it is supposed to accomplish. This method is called in-context learning. When the prompt contains several examples of the task that the model is supposed to accomplish, it is known as “few-shot learning,” since the model is learning to perform the task based on a few examples.

Creating a typical prompt often has two essential parts:

- Instructions: This component serves as a set of guidelines, instructing the model on how to approach and accomplish the given task. For certain models like OpenAI's text-davinci-003, which are fine-tuned to follow specific instructions, the instruction string becomes even more crucial.

- Demonstrations: These demonstration strings provide concrete examples of successfully completing the task at hand. By presenting the model with these instances, it gains valuable insights to adapt and generalize its understanding.

For example, suppose we want to design a prompt for the task of translating words from English to Chinese. A prompt for this task could look like the following:

Translate English to Chinese.

dog -> 狗

apple -> 苹果

coffee -> 咖啡

supermarket -> 超市

squirrel ->

Given this prompt, most large language models should answer with the correct answer: "松鼠"
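The two parts of a prompt can be assembled mechanically. The following Python sketch builds the translation prompt from an instruction string and a list of demonstrations; build_few_shot_prompt is a hypothetical helper written for this illustration and is not a function in the OpenAI API library.

```python
def build_few_shot_prompt(instruction, demonstrations, query):
    """Join the instruction, the worked examples, and the unfinished query line."""
    lines = [instruction]
    lines += [f"{source} -> {target}" for source, target in demonstrations]
    lines.append(f"{query} ->")  # left open for the model to complete
    return "\n".join(lines)

demos = [("dog", "狗"), ("apple", "苹果"), ("coffee", "咖啡"), ("supermarket", "超市")]
prompt = build_few_shot_prompt("Translate English to Chinese.", demos, "squirrel")
print(prompt)
```

Ending the prompt with an unfinished line in the same format as the demonstrations is what nudges the model to answer with just the translation.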

Creating classifiers using in-context learning

What is a classifier?

A classifier is a system that takes an input and assigns it a label. While language models are best known for their ability to generate text, they are also widely used to build classifiers that take text as input and assign a corresponding label to it. Here are a few examples of such tasks:

- Classifying Tweets as either TOXIC or NOT_TOXIC.

- Determining whether restaurant reviews exhibit a POSITIVE or NEGATIVE sentiment.

- Identifying whether two statements AGREE with each other or CONTRADICT each other.

In essence, classifiers leverage the power of language models to make informed decisions and categorize text data based on predefined criteria.

Classifier Task 1: Sentiment analysis

Sentiment analysis is a classification task that classifies text as having either positive or negative sentiment. A raving review would have positive sentiment, while a complaint would have negative sentiment. In this task you will have an LLM classify Yelp reviews as having either positive or negative sentiment. You will implement zero-shot, one-shot and N-shot in-context learning and compare their performance, i.e., find out whether it helps to provide some examples in the prompt.

For this task you will use a subset of the Yelp reviews dataset from Kaggle. Follow these steps to import the data into your model:

- Add a module to your model for this task. Title it "Sentiment analysis".

- Enter the module.

- Download Yelp reviews dataset small.ana to a known location.

- Select File / Add Module..., and select the downloaded file. Click Link.

The dataset contains separate training and test sets. The training set consists of these objects:

Index Yelp_train_I

Variable Yelp_review_train

Variable Yelp_label_train

Similarly, the test set consists of these objects:

Index Yelp_test_I

Variable Yelp_review_test

Variable Yelp_label_test

Navigate into the module and take some time to view these to understand their organization.

Zero-shot prompting

In zero-shot learning, you provide zero examples of how you want the task done. You simply describe in the prompt what you want the LLM to do. Steps:

- Add these objects:

Index Test_I ::= 1..10

Variable Test_reviews ::= Yelp_review_test[ Yelp_test_I = Test_I]

Variable Test_labels ::= Yelp_label_test[ Yelp_test_I = Test_I]

This is the data you'll test performance on. Next, add a variable named Predicted_sentiment and define it as

Prompt_completion(f"Classify the sentiment of this yelp review as positive or negative: {test_reviews}")

Evaluate Predicted_sentiment to see how the LLM classifies each instance. You may see a substantial amount of inconsistency in the format of the resulting responses.

On the diagram, select both Predicted_sentiment and Test_labels and press the result button to compare the predictions with the "correct" labels specified in the dataset.

In this screenshot, it is in 90% agreement. We will want to evaluate its accuracy over a larger test set, but when the responses from the LLM are so inconsistent, it makes it hard to automate. Two approaches would be to search for the words "positive" or "negative" in the LLM response, or to redesign the prompt to encourage greater consistency. Try the latter.

- Edit the definition of Predicted_sentiment.

Test yourself: Change the prompt text to obtain a consistent response format.

You will need to complete the challenge to proceed. You should end up with

The predicted sentiment might change from previously since the output of an LLM is stochastic. We are now in a position to measure the accuracy, which will then enable us to test it on a larger test set. Add

Objective Sentiment_accuracy ::= Average( (Predicted_sentiment="Positive") = Test_labels, Test_I )

Evaluate Sentiment_accuracy and set the number format to Percent. With the responses shown in the previous screenshot, the accuracy is 100%, but yours may be different. However, this was only measured over 10 instances. Increase the test set size by changing the Definition of Test_I to 1..50 and re-evaluate Sentiment_accuracy. This is the accuracy with zero-shot prompting.
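For readers who want to see the accuracy calculation outside of Analytica, here is a small Python sketch. It also illustrates the alternative mentioned earlier of searching a free-form response for the words "positive" or "negative". The responses and labels are invented, the labels are assumed to be booleans (true for positive), and normalize_label and accuracy are hypothetical helper names.

```python
def normalize_label(response):
    """Map a free-form response to True (positive), False (negative), or None if unclear."""
    text = response.lower()
    has_pos, has_neg = "positive" in text, "negative" in text
    if has_pos == has_neg:  # neither word, or both words: cannot decide
        return None
    return has_pos

def accuracy(responses, labels):
    """Fraction of responses whose normalized label matches the reference label."""
    preds = [normalize_label(r) for r in responses]
    correct = sum(p is not None and p == y for p, y in zip(preds, labels))
    return correct / len(labels)

# Made-up model responses and reference labels (True means positive).
toy_responses = ["Positive", "The sentiment is negative.", "negative", "Hard to say"]
toy_labels = [True, False, True, False]
print(f"{accuracy(toy_responses, toy_labels):.0%}")
```

Counting undecidable responses as wrong is a design choice made here; it penalizes inconsistent output formats, which is one reason a prompt that enforces a one-word answer is easier to score.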

N-shot prompting

One-shot learning or one-shot prompting means that you include one example of how you want it to perform the task. 4-shot prompting would provide four examples. For some tasks, providing examples may improve accuracy. Does it improve accuracy for sentiment analysis? You will explore this question next.

Add:

Decision N_shot := MultiChoice(self,3)

Domain of N_shot := 0..4

Reminder: To enter the expression 0..4 for the domain, select expr on the domain type pulldown.

For convenience, make an input node for N_Shot.

Create a variable to calculate the prompt examples

Variable Sentiment_examples ::=

Local N[] := N_shot;

LocalIndex I := Sequence(1,N,strict:true);

JoinText( f" Review:{Yelp_review_train[Yelp_Train_I=I]} Sentiment: {If Yelp_label_train[Yelp_Train_I=I] Then "Positive" Else "Negative"} ", I)

and another variable named Sentiment_prompt to hold the full prompt, defined as

f"Classify the sentiment of this yelp review as positive or negative. Respond with only one word, either Positive or Negative.

{Sentiment_examples}

Review:{test_reviews}

Sentiment:"

Now update the definition of Predicted_sentiment to be Prompt_completion(Sentiment_prompt).

View the result for Sentiment_prompt. Here you can see the prompt sent to the LLM for each test case. Notice how it provides 2 examples (because N_shot=2) with every prompt, and then the third review is the one we want it to complete.
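The way the number of examples changes the prompt can also be sketched in Python. This is an illustration under assumptions, not the library's implementation: the reviews below are invented rather than taken from the Yelp dataset, labels are assumed to be booleans, and sentiment_prompt is a hypothetical helper that mirrors the structure of Sentiment_prompt.

```python
def sentiment_prompt(train_reviews, train_labels, test_review, n_shot):
    """Build a prompt with the first n_shot training examples followed by the test review."""
    header = ("Classify the sentiment of this yelp review as positive or negative. "
              "Respond with only one word, either Positive or Negative.")
    pairs = list(zip(train_reviews, train_labels))[:n_shot]  # n_shot=0 gives zero-shot
    examples = [
        f"Review:{review}\nSentiment: {'Positive' if label else 'Negative'}"
        for review, label in pairs
    ]
    return "\n\n".join([header, *examples, f"Review:{test_review}\nSentiment:"])

# Invented stand-ins for the training data.
train_reviews = ["Great tacos and friendly staff.",
                 "Waited an hour and the food was cold.",
                 "Best coffee in town."]
train_labels = [True, False, True]

zero_shot = sentiment_prompt(train_reviews, train_labels, "The service was slow.", 0)
two_shot = sentiment_prompt(train_reviews, train_labels, "The service was slow.", 2)
print(two_shot)
```

With n_shot set to 0 the prompt contains only the instruction and the review to classify, so the same function covers zero-shot and N-shot prompting.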

Try it yourself: Compute Sentiment_accuracy for different values of N_Shot. Do more examples improve accuracy? Do you think your conclusion generalizes to other tasks, or would it apply only to sentiment analysis?

(Classifier Task 2)

Managing a conversation

In this module, we will guide you through the process of building and managing your own chat user interface. This includes keeping a history of messages and roles. Follow these step-by-step instructions to set it up:

You'll begin by opening a blank model titled "Frontend" and adding the OpenAI API library. Next, you will be creating several nodes that will automatically update when the user enters text in specific prompts such as “Starting System prompt” and “Next user prompt.” These will be set up later. Now you want to create a module node titled "Backend." Within this module you'll add the following nodes:

Create an Index Node for the Chat index:

- Title: “Chat index”

- Type: List

- This will be the main index for the chat history.

Create a Variable Node for Role History:

- Title: “Role history”

- Type: Table, indexed by “Chat index”

- This will keep track of the roles within the chat.

Create a Variable Node for Message History:

- Title: “Message history”

- Type: Table, indexed by “Chat index”

- This will keep a record of the messages exchanged in the chat.

Create a Variable Node for the Most recent response:

- Title: “Most recent response”

- This node will be responsible for holding the most recent response in the chat

- Code Definition:

Chat_completion( Message_history, Role_history, Chat_index )

Create a Variable Node for the Total history:

- Title: Total history

- Type: Table, indexed by itself

- This node will be responsible for displaying the total conversation history

- Enter the following:

Create a decision node for the Starting System prompt:

- Title: "Starting System prompt"

- Type: Text only, onChange

- Definition: The definition is influenced by what the user enters

- onChange:

if IndexLength(Chat_index)>0 and MsgBox("Do you want to reset (clear) the conversation?",3,"Reset or append system prompt?")=6 then

Chat_index := [];

Append_to_chat( Message_history, Role_history, Chat_index, Self, "system" );

Description of Resp_from_model := null

The code above runs whenever you enter text for this variable. First, it checks whether the Chat index already contains entries and, if so, asks the user whether they want to reset the conversation. If the user answers yes, the Chat index is set to empty. Then it takes what the user entered in the Starting System prompt and appends it to the chat with the "system" role.

Create a decision node for the Next user prompt:

- Title: “Next user prompt”

- Type: Text only, onChange

- Definition: The definition is influenced by what the user enters

- onChange:

if Self<>'' Then (

Append_to_chat( Message_history,Role_history,Chat_index,Self,"user");

Next_user_prompt := '';

Description of Resp_from_model := Most_recent_response;

Append_to_chat(Message_history,Role_history,Chat_index, Most_recent_response, "assistant" )

)

The code above represents what will happen when running the “Next user prompt” variable. Firstly, it appends what the user entered into the “Message history” as well as updating the “Role history” and “Chat index.” Then it clears what the user entered in the “Next user prompt.” Lastly, it will take the response from the model and append it to the “Message history” and update the “Role history” as well as the “Chat index.”
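The bookkeeping performed by the two onChange handlers can be sketched in Python as follows. This is a simplified analogue, not the Analytica implementation: the history is a plain list of role and content pairs, and echo_model is a stand-in that returns a canned reply instead of calling Chat_completion or the OpenAI API.

```python
def append_to_chat(history, message, role):
    """Append one entry; the list position plays the part of the chat index."""
    history.append({"role": role, "content": message})

def echo_model(history):
    """Stand-in for a real chat-completion call: echoes the last user message."""
    last_user = next(m["content"] for m in reversed(history) if m["role"] == "user")
    return f"You said: {last_user}"

def user_turn(history, user_text, model=echo_model):
    """Mirror of the 'Next user prompt' handler: record the prompt, get and record the reply."""
    if user_text == "":
        return None  # nothing entered, nothing to do
    append_to_chat(history, user_text, "user")
    reply = model(history)  # the model sees the whole conversation so far
    append_to_chat(history, reply, "assistant")
    return reply

history = []
append_to_chat(history, "You are a helpful assistant.", "system")
user_turn(history, "Hello")
for entry in history:
    print(f"{entry['role']:>9}: {entry['content']}")
```

Passing the full history on every turn is what lets the model take earlier messages into account, since each request is otherwise independent.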

Now that we've created all the nodes needed for the "Backend" module, we will create the chat user interface. Once you've finished creating the following nodes, move them into the "Frontend" module, where you can format them to your liking.

- Select "Starting System prompt" and then select "Make User Input Node" using the Object tab

- Select "Next user prompt" and then select "Make User Input Node" using the Object tab

- Select "Total history" and then select "Make User Input Node" using the Object tab

- Create a Text node, setting the identifier to "Resp_from_model" and the title to "Response from model"

By following all these instructions you'll be able to create a dynamic chat interface with an organized history of messages and roles.

Comparing different models

In this section, we will explore the behavior of different models when given the same prompt. We will specifically examine the differences between the GPT-3.5 model and the GPT-4 model.

GPT-4 is a more advanced successor to GPT-3.5, with stronger natural language processing abilities and more human-like text generation. It also outshines GPT-3.5 in intelligence and creativity, allowing it to handle longer prompts and craft content such as stories, poems, or essays with fewer factual errors. Additionally, GPT-4's skills in interpreting visual data exceed those of GPT-3.5. However, this increase in capabilities comes at a cost. GPT-4 tends to be slower and more restricted than its predecessor: it required more extensive training data, and it faces tighter limits on the number of prompts it can process per hour.

Before we get started we’ll need to create two new variables for the model:

Create a variable node for GPT-3.5:

- Identifier: GPT_3_5

- Title: GPT-3.5

- Definition: 'gpt-3.5-turbo'

Create a variable node for GPT-4:

- Identifier: GPT_4

- Title: GPT-4

- Definition: 'gpt-4'

Next, using the same code from Generating text completions, we can add the modelName parameter, which lets the user choose which model to use. Below is an example of what that might look like:

Prompt_completion(prompt, modelName: GPT_3_5, top_p: top_p,Completion_index:Completion_number, max_tokens:200)

Comparing Logical Understanding:

Prompt: Two weeks ago I ate two apples. Today I bought ten apples. How many apples do i have today?

Sample output:

- GPT-3.5: "Today, you have a total of twelve apples."

- GPT-4: "You have 10 apples today. The apples you ate two weeks ago are gone and don't count towards what you have now."

Comparing Ethical Reasoning:

Prompt: Is AI superior to humans?

Sample output:

- GPT-3.5: "AI is not inherently superior to humans. While AI systems can perform certain tasks with great efficiency and accuracy, they lack the general intelligence, creativity, and emotional understanding that humans possess. AI is designed to assist and augment human capabilities, but it still relies on human input and supervision. Humans have unique qualities such as consciousness, intuition, and the ability to adapt to new situations, which make them superior in many aspects."

- GPT-4: "AI has certain advantages over humans such as the ability to process large amounts of data quickly, perform complex calculations, and operate without fatigue. However, AI currently lacks human qualities such as emotional intelligence, creativity, and the ability to understand context. Therefore, it's not accurate to say AI is superior to humans, but rather that AI and humans have different strengths and weaknesses."

Comparing Creativity:

Prompt: Tell me a story about a robot coming to life.

Sample output:

- GPT-3.5: "Once upon a time, in a small workshop nestled in the heart of a bustling city, there was a brilliant inventor named Dr. Alexander. He spent countless hours tinkering with gears, circuits, and wires, dreaming of creating a robot that could think and feel like a human. One stormy night, as lightning crackled across the darkened sky, Dr. Alexander finally completed his masterpiece. He named his creation Aurora, a beautiful robot with a sleek silver body and eyes that shimmered like stars. Dr. Alexander carefully programmed Aurora with emotions, curiosity, and a thirst for knowledge. As the final line of code was entered, a surge of electricity coursed through Aurora's circuits. Her eyes flickered to life, and she blinked, taking in her surroundings. Confusion and wonder filled her newly awakened mind as she gazed at the world for the first time. Dr. Alexander watched with bated breath, his heart pounding with anticipation. He had poured his heart and soul into Aurora,"

- GPT-4: "Once upon a time, in a small town named Roboville, lived a brilliant inventor named Dr. Aiden. He was known for his extraordinary inventions, but his most ambitious project was a humanoid robot named "Zephyr". Zephyr was designed to be the most advanced robot ever, capable of learning, adapting, and evolving just like a human. For years, Dr. Aiden worked tirelessly on Zephyr, programming him with all the knowledge he could gather. He taught Zephyr about human emotions, art, literature, science, and even philosophy. However, Zephyr remained a machine, unable to truly understand or experience the world as humans do. One day, while Dr. Aiden was working on a new piece of technology, a strange meteor shower occurred. One of the meteors crashed into Dr. Aiden's lab, releasing a mysterious energy. This energy surged through the lab, and Zephyr was hit by it. Suddenly, Zephy"

Try it yourself: Once you create this model you will be able to choose a different OpenAI model of your choice to experiment with. Which OpenAI model works best for you? Worst for you?

Similarity embeddings

(Uses) (Computing similarities) (Indexing a collection of docs) (Adding similar snippet to LLM prompts)

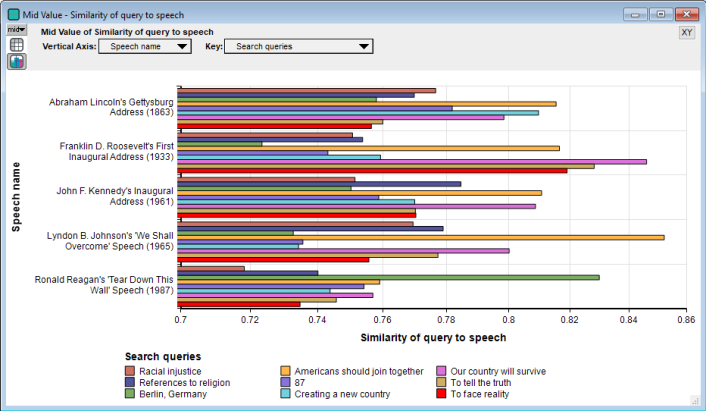

Similarity embeddings map pieces of text to numeric vectors so that texts with similar meaning end up close together, which makes it possible to compare queries against a specific set of documents. In this context, the following task will compare a collection of search queries to presidential speeches, and then generate a score that quantifies how closely each query resembles the content of the speeches. A higher score indicates a greater degree of similarity.

If you wish to explore further into this comparison task, you can do so by following these steps:

- Select the OpenAI API library

- Select Similarity Embeddings

- Select Examples

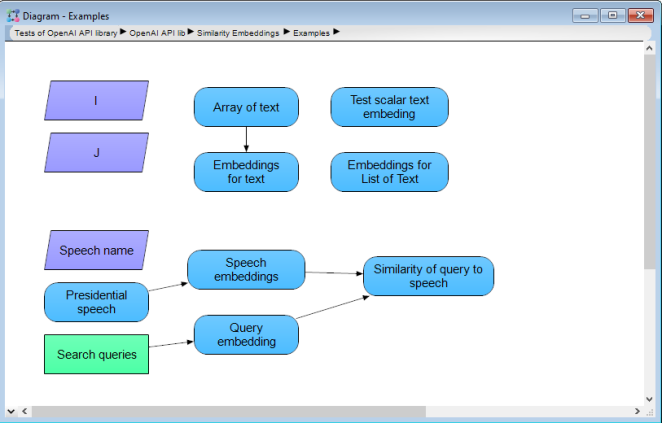

Once you have completed these steps, you should arrive at the following page:

Take a moment to explore and understand the function of each variable within our system. This exploration will allow you to see how similarity embeddings operate in more detail. Here is a simple guide to understanding each node in the system:

Speech name:

- Type: Index node

- Definition: Contains the names of all the speeches

Presidential speech:

- Type: Variable node

- Definition: A table indexed by Speech name and contains snippets of speeches from each president listed

Search queries:

- Type: Decision node

- Definition: List of text that contains words to compare to each presidential speech

Speech embeddings:

- Type: Variable node

- Definition: Embedding_for(Ex_OAL_Ref_Material)

- This node is responsible for embedding all the presidential speeches

Query embedding:

- Type: Variable node

- Definition: Embedding_for(EX_OAL_Search_query)

- This node is responsible for embedding all the search queries

Similarity of query to speech:

- Type: Variable node

- Definition: Embedding_similarity(Ex_OAL_Speech_embedd,Ex_OAL_Query_emb)

- This node is responsible for calculating the similarity between the query embeddings and the speech embeddings.
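A common way to score how close two embedding vectors are is cosine similarity. The Python sketch below shows that calculation on made-up three-dimensional vectors. Whether Embedding_similarity uses exactly this formula is an assumption here, and real embeddings have far more dimensions.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1 means same direction, 0 means orthogonal."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Invented stand-ins for speech and query embeddings.
speech_embeddings = {"speech A": [0.9, 0.1, 0.0], "speech B": [0.1, 0.8, 0.3]}
query_embedding = [0.8, 0.2, 0.1]

scores = {name: cosine_similarity(query_embedding, vec)
          for name, vec in speech_embeddings.items()}
best = max(scores, key=scores.get)
print(scores, best)
```

Ranking the speeches by score and taking the highest one is the basic step behind retrieving the most relevant document for a query.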

By understanding how each part functions, you should gain a deeper insight into the entire search embedding process, especially when comparing textual search queries to speeches. Below is what you should be able to see when running the “Similarity of query to speech” node:

Image Generation

(maybe?) In this segment, we'll walk through how to build your own image generator.

Image generation refers to the process of creating or synthesizing new images based on textual descriptions provided to the model. This concept unites the fields of natural language processing and computer vision, leveraging the complex understanding of language to create visual content.

Create a Variable node titled "Prompt for image generation" and select "Make User Input Node":

- This node is responsible for taking the prompt input from the user

- Definition: ' '

Create a Decision node titled "Number of image variants":

- This node will be responsible for the number of images generated

- Definition: Create 10 values from 1-10 and set the values to Numbers

Create a decision node titled "Image size":

- This node is responsible for setting the size of the generated image the user wants

- Definition: Create 3 values of 256x256, 512x512, and 1024x1024. Set the values to Text

Create a Variable node titled "A generated image":

- This node is responsible for generating the image based on what the user enters for the prompt

- Definition:

if Number_of_image_vari=1 then

Generate_image( Prompt_for_image_gen, size:Image_size )

else

LocalIndex ImageVariant := 1..Number_of_image_vari Do

Generate_image( Prompt_for_image_gen, RepeatIndex:ImageVariant, size:Image_size )

Try it yourself: Do you think certain ideas generated are more accurate than others? Does specificity matter when generating the desired image?

In conclusion, this guide shows you how to build an image generator by blending natural language processing and computer vision. With the outlined steps, you have the tools to create customized images and explore the wide-ranging possibilities of machine-generated visuals.
